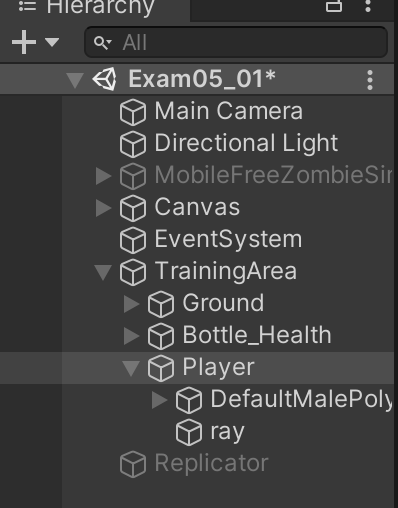

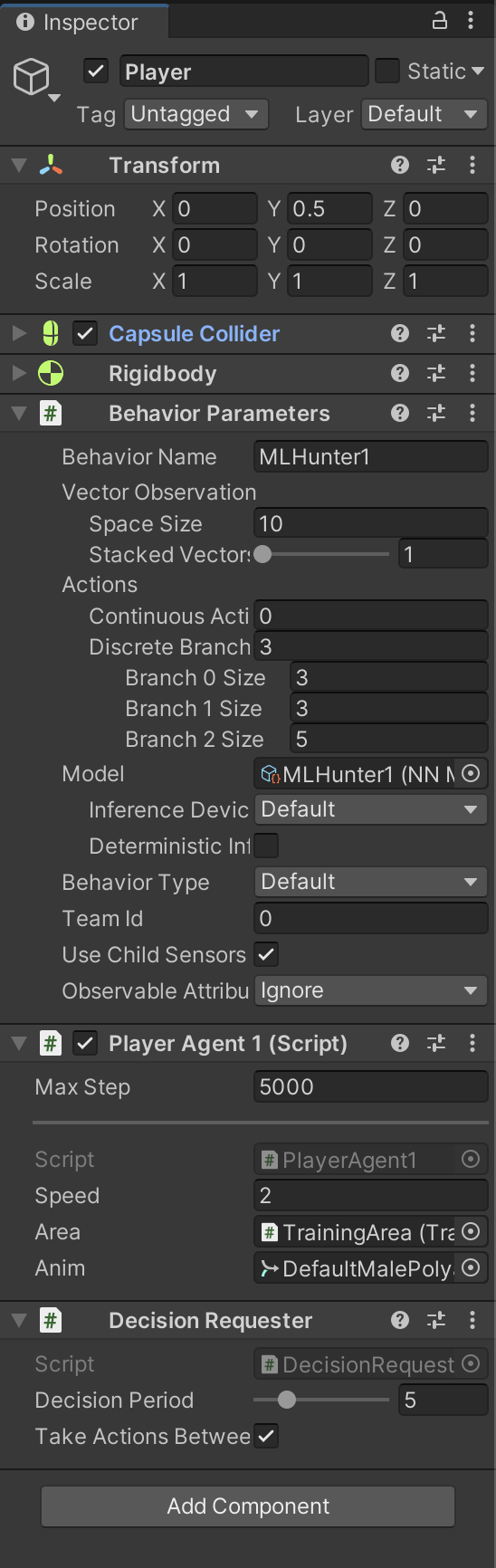

MLZombieHunter 01

Unity3D/ml-agent, 2022. 3. 21. 22:20

Picking up the health potion

Picking up the health potion while avoiding the poison potion

https://github.com/smilejsu82/MLZombieHunter

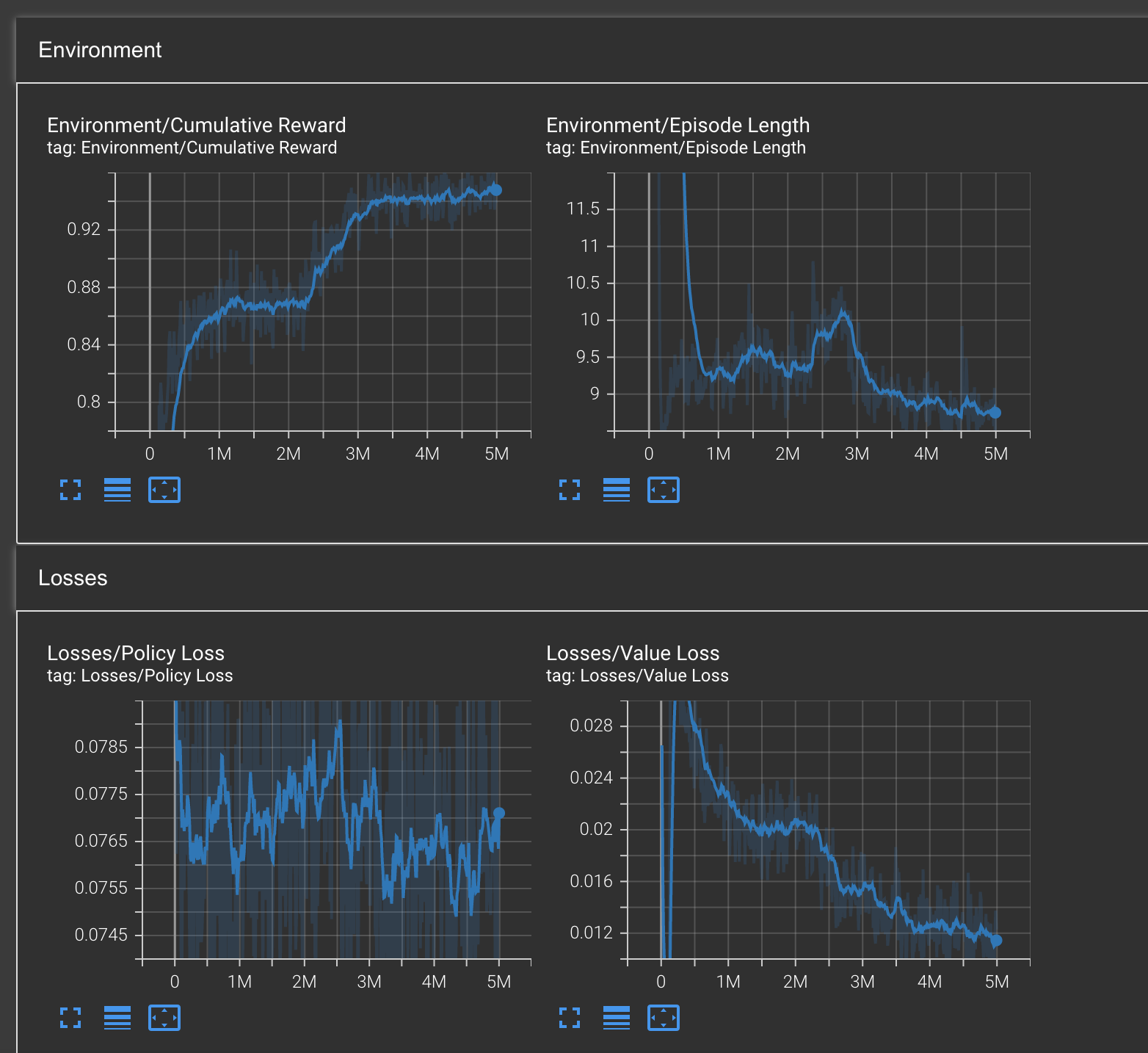

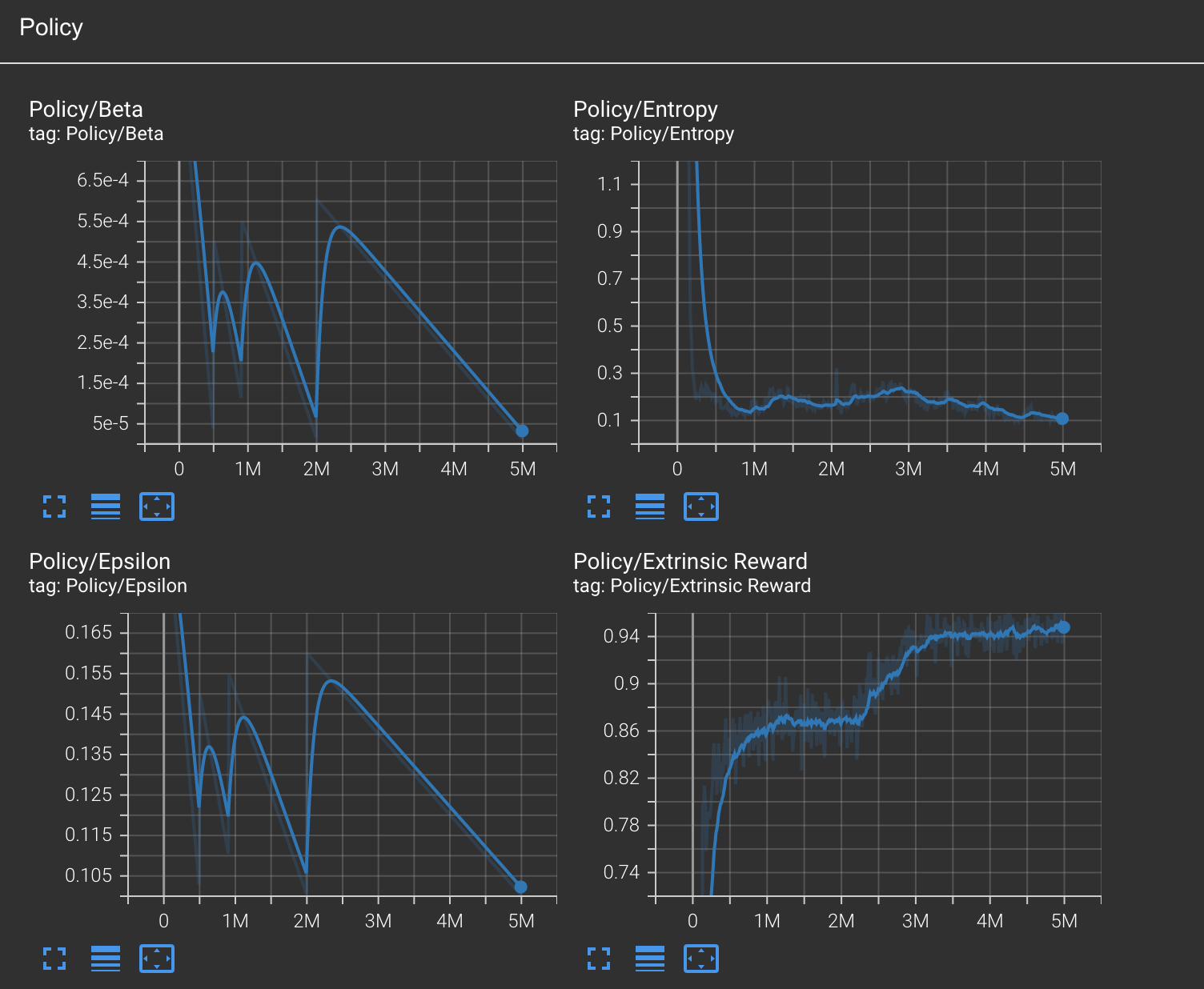

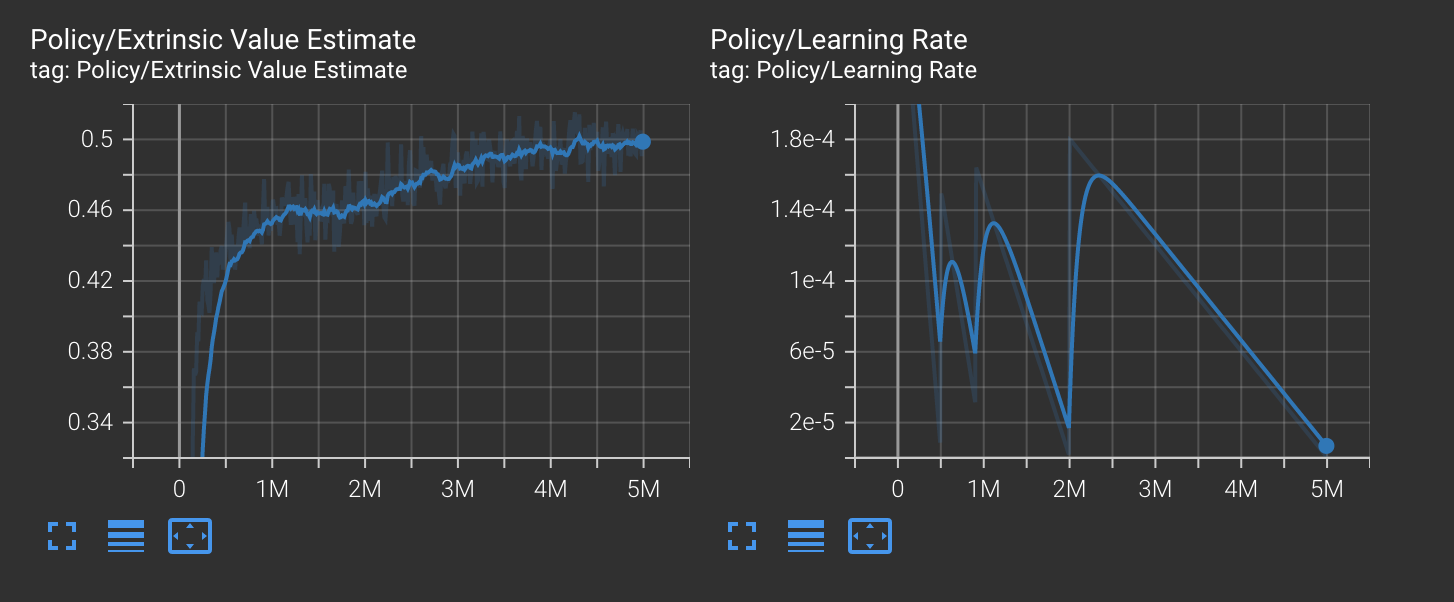

Config file (.yaml)

behaviors:
  MLHunter1:
    trainer_type: ppo
    hyperparameters:
      batch_size: 100
      buffer_size: 2000
      learning_rate: 0.0003
      beta: 0.001
      epsilon: 0.2
      lambd: 0.95
      num_epoch: 3
      learning_rate_schedule: linear
    network_settings:
      normalize: false
      hidden_units: 64
      num_layers: 1
      vis_encode_type: simple
    reward_signals:
      extrinsic:
        gamma: 0.8
        strength: 1.0
    keep_checkpoints: 5
    max_steps: 5000000
    time_horizon: 1000
    summary_freq: 12000
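As a rough sanity check on these numbers, the sketch below works out how many minibatch gradient steps one PPO policy update performs and roughly how far ahead the extrinsic reward looks. It is plain Python and not part of the project. The dictionary copies the values above by hand, and the `1 / (1 - gamma)` "effective horizon" is a common rule of thumb, not something ML-Agents reports.

```python
# Values copied by hand from the config above
# (assumption: no other file or command-line flag overrides them).
config = {
    "batch_size": 100,
    "buffer_size": 2000,
    "num_epoch": 3,
    "gamma": 0.8,
}


def minibatches_per_update(cfg):
    """Minibatch gradient steps per policy update.

    Each epoch sweeps the collected buffer once in chunks of batch_size.
    """
    return (cfg["buffer_size"] // cfg["batch_size"]) * cfg["num_epoch"]


def effective_horizon(gamma):
    """Rule-of-thumb number of steps over which discounted rewards still matter."""
    return 1.0 / (1.0 - gamma)


steps = minibatches_per_update(config)
horizon = effective_horizon(config["gamma"])
print(steps, round(horizon, 2))
```

With `gamma` at 0.8 the agent mostly values rewards that arrive within about five steps. That may be fine for a short reach-the-potion episode, but a task with later payoffs would usually call for a higher value.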

using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using Unity.MLAgents;
using Unity.MLAgents.Sensors;
using Unity.MLAgents.Actuators;

public class PlayerAgent1 : Agent
{
    public float speed = 2f;
    private Rigidbody rBody;
    public TrainingArea1 area;
    public Animator anim;

    public override void Initialize()
    {
        this.rBody = this.GetComponent<Rigidbody>();
    }

    public override void OnEpisodeBegin()
    {
        // Stop any leftover motion, then reposition the agent and the potions
        this.rBody.velocity = Vector3.zero;
        this.rBody.angularVelocity = Vector3.zero;
        this.area.ResetArea();
    }

    // Adds 10 floats in total (3 + 3 + 1 + 3)
    public override void CollectObservations(VectorSensor sensor)
    {
        sensor.AddObservation(this.transform.localPosition); // 3
        sensor.AddObservation(area.potionTrans.localPosition); // 3
        sensor.AddObservation(Vector3.Distance(area.potionTrans.localPosition, this.transform.localPosition)); // 1

        // Unit vector pointing from the potion to the agent
        var c = this.transform.localPosition - area.potionTrans.localPosition;
        var nor = c.normalized;
        sensor.AddObservation(nor); // 3
    }

    public override void OnActionReceived(ActionBuffers actions)
    {
        var h = actions.DiscreteActions[0]; // Heuristic passes 0, 1, or 2
        // Turn h into a direction component
        h -= 1; // 0, 1, 2 --> -1, 0, 1
        var v = actions.DiscreteActions[1];
        v -= 1;
        var dir = new Vector3(h, 0, v);
        var spd = (actions.DiscreteActions[2] + 1) * 0.5f; // 1, 2, 3, 4, 5 * 0.5 --> max 2.5
        //Debug.Log(dir);

        if (dir != Vector3.zero)
        {
            //var movement = dir * this.speed * Time.fixedDeltaTime;
            // Face the chosen direction, then move forward at the chosen speed
            float angle = Mathf.Atan2(dir.x, dir.z) * Mathf.Rad2Deg;
            this.transform.eulerAngles = Vector3.up * angle;
            var movement = this.transform.forward * spd * Time.fixedDeltaTime;
            this.rBody.MovePosition(this.transform.position + movement);
            this.anim.Play("RunFWD_AR");
        }
        else
        {
            this.anim.Play("IdleBattle01_AR");
        }

        // End the episode if the agent falls off the floor
        if (this.transform.localPosition.y < -1f)
        {
            EndEpisode();
        }

        // Small per-step reward. Assumes MaxStep > 0 (set in the Inspector);
        // with MaxStep == 0 this expression evaluates to infinity.
        this.AddReward(1f / this.MaxStep);
    }

    public override void Heuristic(in ActionBuffers actionsOut)
    {
        var h = Input.GetAxisRaw("Horizontal"); // -1, 0, 1 --> 0, 1, 2
        var v = Input.GetAxisRaw("Vertical"); // -1, 0, 1
        var discreteActionOut = actionsOut.DiscreteActions;
        discreteActionOut[0] = (int)h + 1; // 0: left, 1: no movement, 2: right
        discreteActionOut[1] = (int)v + 1; // 0: backward, 1: no movement, 2: forward
        // Branch 2 (speed) is not set here.
    }

    private void OnCollisionEnter(Collision collision)
    {
        if (collision.transform.CompareTag("HealthPotion"))
        {
            //Debug.Log("get potion");
            AddReward(1.0f);
            EndEpisode();
        }
        else if (collision.transform.CompareTag("EndurancePotion"))
        {
            //Debug.Log("test endurance potion!");
            // The endurance potion acts as the poison potion: penalize and remove it
            AddReward(-1.0f);
            Destroy(collision.gameObject);
            EndEpisode();
        }
    }
}

using System.Collections;

using System.Collections.Generic;
using UnityEngine;
using UnityEngine.UI;

public class TrainingArea1 : MonoBehaviour
{
    //public Button btn;
    public Transform potionTrans;
    public GameObject endurancePotionPrefab;
    private GameObject endurancePotionGo;
    public PlayerAgent1 agent;

    void Start()
    {
        //this.btn.onClick.AddListener(() => {
        //    var rand = Random.value * 8 - 4; // [0.0, 1.0] * 8 -> [0.0, 8.0]; - 4 -> [-4.0, 4.0]
        //    var tPos = new Vector3(rand, 0.5f, rand);
        //    this.potionTrans.localPosition = tPos;
        //    var dis = Vector3.Distance(tPos, this.agent.transform.localPosition);
        //    Debug.Log(dis);
        //});
    }

    public void ResetArea()
    {
        // Note: the same value is used for X and Z, so the health potion
        // always spawns on the diagonal x == z.
        var rand = Random.value * 8 - 4; // [0.0, 1.0] * 8 -> [0.0, 8.0]; - 4 -> [-4.0, 4.0]
        var tPos = new Vector3(rand, 0.5f, rand);
        this.potionTrans.localPosition = tPos;
        this.agent.transform.localPosition = new Vector3(0, 0.6f, 0);

        // Remove the poison potion if the previous episode ended without it being picked up
        if (this.endurancePotionGo != null)
        {
            Destroy(this.endurancePotionGo);
        }

        // Spawn a poison potion in roughly half of the episodes (also on the diagonal)
        if (Random.value * 100 <= 50)
        {
            var randXZ = Random.value * 8 - 4;
            var tpos = new Vector3(randXZ, 0.5f, randXZ);
            this.endurancePotionGo = Instantiate(this.endurancePotionPrefab, this.transform);
            this.endurancePotionGo.transform.localPosition = tpos;
        }
    }
}
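`ResetArea` uses one random value for both X and Z, so every spawn point lies on the line x = z. The Python sketch below reproduces that sampling outside Unity and contrasts it with drawing the two coordinates independently. `spawn_independent` is a hypothetical alternative, not code from the repository, and `rng.random() * 8 - 4` stands in for `Random.value * 8 - 4`.

```python
import random

HALF_EXTENT = 4.0  # spawn range is [-4, 4] on each axis, as in ResetArea
POTION_Y = 0.5     # fixed height used for both potions


def spawn_as_written(rng):
    """Mirror ResetArea: one sample reused for X and Z."""
    r = rng.random() * 8 - 4
    return (r, POTION_Y, r)


def spawn_independent(rng):
    """Hypothetical variant: sample X and Z separately to cover the whole square."""
    x = rng.uniform(-HALF_EXTENT, HALF_EXTENT)
    z = rng.uniform(-HALF_EXTENT, HALF_EXTENT)
    return (x, POTION_Y, z)


rng = random.Random(0)  # fixed seed so runs are repeatable
diagonal = [spawn_as_written(rng) for _ in range(1000)]
square = [spawn_independent(rng) for _ in range(1000)]

# Every point from the original sampling has x == z.
on_diagonal = all(p[0] == p[2] for p in diagonal)
# Independent sampling almost never lands exactly on the diagonal.
off_diagonal = sum(1 for p in square if p[0] != p[2])
print(on_diagonal, off_diagonal)
```

If covering the whole floor is the goal, the equivalent change in Unity would be two separate `Random.Range(-4f, 4f)` calls, one per axis. Whether the diagonal-only spawn was intended is not stated in the post.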